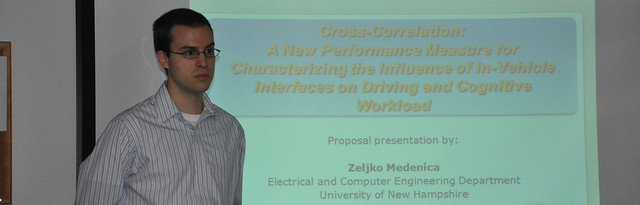

Last November Zeljko Medenica defended his dissertation [1]. Zeljko explored new performance measures that can be used to characterize interactions with in-vehicle devices. The impetus for this research came from our studies of personal navigation devices. Specifically, in work published in 2009 [2] we found fairly large differences in the time drivers spend looking at the road ahead (more with voice-only turn-by-turn directions, less when a map is also displayed). However, the commonly used driving performance measures (variance of lane position and of steering wheel angle) did not indicate differences between these conditions. We suspected that driving might still be affected, and Zeljko's work confirms this hypothesis.

Zeljko is now with Nuance, working with Garrett Weinberg. Garrett and Zeljko collaborated during Zeljko's 2009 and 2010 internships at MERL, where Garrett worked before joining Nuance.

I would like to thank Zeljko’s committee for all of their contributions: Paul Green, Tim Paek, Tom Miller, and Nicholas Kirsch. Below is a photo of all of us after the defense. See more photos on Flickr.

Tim Paek (left), Zeljko Medenica, Andrew Kun, Tom Miller, Nicholas Kirsch, and Paul Green (on the laptop)

References

[1] Zeljko Medenica, “Cross-Correlation Based Performance Measures for Characterizing the Influence of In-Vehicle Interfaces on Driving and Cognitive Workload,” Doctoral Dissertation, University of New Hampshire, 2012.

[2] Andrew L. Kun, Tim Paek, Zeljko Medenica, Nemanja Memarovic, Oskar Palinko, “Glancing at Personal Navigation Devices Can Affect Driving: Experimental Results and Design Implications,” Automotive UI 2009.