Coming up: CHI 2016 course on automotive user interfaces

At this year’s CHI conference Bastian Pfleging, Nora Broy and I will present a course introducing automotive user interfaces. Here’s the course abstract:

The objective of this course is to provide newcomers to Automotive User Interfaces with an introduction and overview of the field. The course will introduce the specifics and challenges of In-Vehicle User Interfaces that set this field apart from others. We will provide an overview of the specific requirements of AutomotiveUI, discuss the design of such interfaces, also with regard to standards and guidelines. We further outline how to evaluate interfaces in the car, discuss the challenges with upcoming automated driving and present trends and challenges in this domain.

Interested? Please register through the conference registration system and sign up for our course.

AutomotiveUI 2013: A brief report

At the end of October I was in Eindhoven for AutomotiveUI 2013. The conference was hosted by Jacques Terken, who along with his team did a splendid job: from the technical content, to the venue, to the banquet, and the invited demonstrations, everything was of high quality and ran smoothly. Thanks Jacques and colleagues!

The conference started with workshops, including our CLW 2013. The workshop was sponsored by Microsoft Research and it brought together over 30 participants. In addition to six contributed talks, the workshop featured two invited speakers. The keynote lecture was given by Klaus Bengler who discussed challenges with cooperative driving. Tuhin Diptiman discussed the implications of the 2013 NHTSA visual manual guidelines on the design of in-vehicle interfaces. Thanks Klaus and Tuhin for your engaging presentations! See pictures from CLW on Flickr.

The main conference included talks, posters and demos. Our group was productive: we had one talk and three posters. One of the memorable moments of the conference was the invited demos. I had a chance to ride in a TNO car using Cooperative Adaptive Cruise Control (CACC). See the video below (the driver and narrator is TNO researcher E. (Ellen) van Nunen). Thanks Ellen!

See my pictures from the conference on Flickr.

LED Augmented Reality: Video Posted

During the 2012-2013 academic year I worked with a team of UNH ECE seniors to explore using an LED array as a low-cost heads-up display that would provide in-vehicle turn-by-turn navigation instructions. Our work will be published in the proceedings of AutomotiveUI 2013 [1]. Here’s the video introducing the experiment.

References

[1] Oskar Palinko, Andrew L. Kun, Zachary Cook, Adam Downey, Aaron Lecomte, Meredith Swanson, Tina Tomaszewski, “Towards Augmented Reality Navigation Using Affordable Technology,” AutomotiveUI 2013

2013 Microsoft Research Faculty Summit – Impressions

This week I attended the Microsoft Research Faculty Summit, an annual event held at MSR Redmond. The 2013 event gathered over 400 faculty from around the world. I was honored to receive an invitation, as these invitations are competitive: MSR researchers recommend faculty to invite, and a committee at MSR selects a subset who receive invitations.

Below are some of my impressions from the event. But, before I go on, I first wanted to thank MSR researchers John Krumm, Ivan Tashev and Shamsi Iqbal for spending time with me at the summit. Thanks also to MSR’s Tim Paek, who has played a key role in a number of our studies at UNH.

Bill Gates inspires

Bill Gates was the opening keynote speaker. He discussed his work with the Gates Foundation and answered audience questions. One of the interesting things from the Q&A session was Bill’s proposed analogy that MOOCs are similar to recorded music: in the past there was much more live music, while today we primarily listen to recorded music. In the future live lectures might also become much less common and we might instead primarily listen to recorded lectures by the best lecturers. While this might sound scary to faculty, Bill points out that lectures are just one part of a faculty member’s education-related efforts. Others include work in labs, study sessions, and discussions.

MSR is a uniquely open industry lab

While MSR is only about 1% of Microsoft, it spends as much on computing research as the NSF. And most importantly, as Peter Lee, Corporate VP MSR, pointed out, MSR researchers publish, and in general conduct their work in an open fashion. MSR also sets its own course independently, even of Microsoft proper.

Microsoft supports women in computing

The Faculty Summit featured a session on best practices in promoting computing disciplines to women. One suggestion that stuck with me is that organizations (e.g. academic departments) should track their efforts and outcomes. Once you start tracking, and creating a paper trail, things will start to change.

Moore’s law is almost dead (and will be by 2025)

Doug Burger, Director of Client and Cloud Applications in Microsoft Research’s Extreme Computing Group, pointed out that we cannot keep increasing computational power by reducing transistor size, as our transistors are becoming atom-thin. There’s a need for new approaches. One possible direction is to customize hardware: e.g. if we only need 20 bits for a particular operation, why implement the logic with 32?

The Lab of Things is a great tool for ubicomp research

Are you planning a field experiment in which you expect to collect data from electronic devices in the home? Check out the Lab of Things (LoT); it’s really promising. It allows you to quickly deploy your system, monitor system activity from the cloud, and log data in the cloud. Here’s a video introducing the LoT:

Seattle and the surrounding area are beautiful

I really like Seattle, with the Space Needle, the lakes, the UW campus, Mount Rainier and all of the summer sunshine.

Pictures and videos

See pictures from the 2013 Microsoft Research Faculty Summit and from Seattle on Flickr. Videos of talks and sessions are available at the Virtual Faculty Summit 2013 website.

CLW 2012 report

Background

The 2012 Cognitive Load and In-Vehicle Human-Machine Interaction workshop (CLW 2012) was held in conjunction with AutomotiveUI 2012 in Portsmouth, NH. The workshop was the second CLW in a row – CLW 2011 was held at AutomotiveUI 2011 in Salzburg, Austria.

Overview

Over 35 people from government, industry and academia attended CLW 2012.

For CLW 2012 the organizers made the decision to involve a large number of experts in the workshop, instead of only including contributions by authors responding to our CFP. Thus, the CLW 2012 program included three expert presentations, as well as a government-industry panel with four participants. Each of these expert participants discussed unique aspects of estimating and utilizing cognitive load for the design and deployment of in-vehicle human-machine interfaces.

Bryan Reimer opened the expert presentations with a discussion of the relationship between driver distraction and cognitive load. Next, Bruce Mehler discussed practical issues in estimating cognitive load from physiological measures. Finally, Paul Green discussed how cognitive load measures might fit in with the NHTSA visual-manual guidelines.

The expert presentations were followed by a government-industry panel. Chris Monk (Human Factors Division Chief at NHTSA) presented the NHTSA perspective on cognitive load and HMI design. Jim Foley (Toyota Technical Center, USA) introduced the OEM perspective. Scott Pennock (QNX & ITU-T Focus Group on Driver Distraction) introduced issues related to standardization. Garrett Weinberg (Nuance) focused on issues related to voice user interfaces.

Following these presentations, and the accompanying lively discussions, workshop participants viewed eight posters.

Evaluation

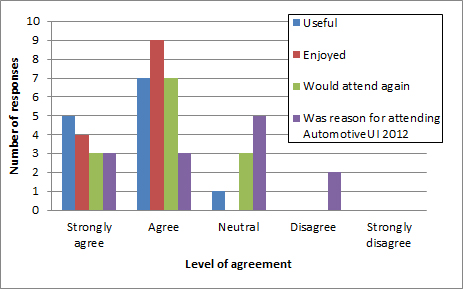

At the end of the workshop we asked participants to indicate their level of agreement with these four statements:

- I found the workshop to be useful.

- I enjoyed the workshop.

- I would attend a similar workshop at a future AutomotiveUI conference.

- This workshop is the reason I am attending AutomotiveUI 2012.

The responses of 13 participants are shown in the figure (the workshop organizers in attendance did not complete the questionnaire). They indicate that the workshop was a success.

Next steps

Since the conclusion of CLW 2012 co-organizers Peter Froehlich and Andrew Kun joined forces with Susanne Boll and Jim Foley to organize a workshop at CHI 2013 on automotive user interfaces. Also, a proposal for CLW 2013 at AutomotiveUI 2013 is in the works.

Thank you presenters and participants!

The organizers would like to extend our warmest appreciation to all of the presenters for the work that went into the expert presentations, the panel discussion, and the poster papers and presentations. We would also like to thank all of the workshop attendees for raising questions, discussing posters, and sharing their knowledge and expertise.

You can see more pictures from CLW 2012 on Flickr.

This report is also available on the CLW website.

AutomotiveUI 2012 report published in IEEE Pervasive Computing

The report on the AutomotiveUI 2012 conference, co-authored by Linda Boyle, Bryan Reimer, Andreas Riener and me, was recently published by IEEE Pervasive Computing [1]. The reference on this page points to my final version of the paper. You can also download the paper with the published layout directly from the IEEE here.

References

[1] Andrew L. Kun, Linda Ng Boyle, Bryan Reimer, Andreas Riener, “AutomotiveUI: Interacting with technology in vehicles,” IEEE Pervasive Computing, April-June 2013

Special interest session on cognitive load at the 2012 ITS World Congress

This year Peter Froehlich and I co-organized a special interest session on cognitive load at the 2012 ITS World Congress. The session featured six experts in the field (in alphabetical order):

- Corinne Brusque, Director, IFSTTAR LESCOT, France

- Johan Engström, senior project manager, Volvo Technology, Sweden

- James Foley, Senior Principal Engineer, CSRC, Toyota, USA

- Chris Monk, Project Officer, US DOT

- Kazumoto Morita, Senior Researcher, National Safety and Environment Laboratory, Japan

- Scott Pennock, Chairman of the ITU-T Focus Group on Driver Distraction and Senior Hands-Free Standards Specialist at QNX, Canada

Peter posted a nice overview of the session, which also includes the presentation slides.

Announced: Cognitive load and in-vehicle HMI special interest session at the 2012 ITS World Congress

Continuing the work of the 2011 Cognitive Load and In-vehicle Human-Machine Interaction workshop at AutomotiveUI 2011, Peter Fröhlich and I are co-organizing a special interest session on this topic at this year’s ITS World Congress.

The session will be held on Friday, October 26, 2012. Peter and I were able to secure the participation of an impressive list of panelists. They are (in alphabetical order):

- Corinne Brusque, Director, IFSTTAR LESCOT, France

- Johan Engström, senior project manager, Volvo Technology, Sweden

- James Foley, Senior Principal Engineer, CSRC, Toyota, USA

- Chris Monk, Project Officer, US DOT

- Kazumoto Morita, Senior Researcher, National Safety and Environment Laboratory, Japan

- Scott Pennock, Chairman of the ITU-T Focus Group on Driver Distraction and Senior Hands-Free Standards Specialist at QNX, Canada

The session will be moderated by Peter Fröhlich. We hope that the session will provide a compressed update on the state-of-the-art in cognitive load research, and that it will serve as inspiration for future work in this field.

Video calling while driving? Not a good idea.

Do you own a smart phone? If yes, you’re likely to have tried video calling (e.g. with Skype or FaceTime). Video calling is an exciting technology, but as Zeljko Medenica and I show in our CHI 2012 Work-in-Progress paper [1], it’s not a technology you should use while driving.

Zeljko and I conducted a driving simulator experiment in which a driver and another participant were given the verbal task of playing the game of Taboo. The driver and the other participant were in separate rooms and spoke to each other over headsets. In one experimental condition, the driver and the other participant could also see each other as shown in the figure below. We wanted to find out if in this condition drivers would spend a significant amount of time looking at the other participant. This is an important question, as time spent looking at the other participant is time not spent looking at the road ahead!

We found that, when drivers felt that the driving task was demanding, they focused on the road ahead. However, when they perceived the driving task to be less demanding they looked at the other participant significantly more.

What this tells us is that, under certain circumstances, drivers are willing to engage in video calls. This is due, at least in part, to the (western) social norm of looking at the person you’re talking to. These results should serve as a warning to interface designers, lawmakers (yes, there’s concern [2]), transportation officials, and drivers that video calling can be a serious distraction from driving.

Here’s a video that introduces the experiment in more detail:

References

[1] Andrew L. Kun, Zeljko Medenica, “Video Call, or Not, that is the Question,” to appear in CHI ’12 Extended Abstracts

[2] Claude Brodesser-Akner, “State Assemblyman: Ban iPhone4 Video-Calling From the Road,” New York Magazine. Date accessed 03/02/2012