On November 11 and 12 I was at the AutomotiveUI 2010 conference serving as program co-chair with Susanne Boll. The conference was hosted by Anind Dey at CMU and co-chaired by Albrecht Schmidt.

The conference was successful and really fun. I could go on about all the great papers and posters (including two posters from our group at UNH [1,2]) but in this post I’ll only mention two talks: John Krumm’s keynote and, selfishly, my own (this is my blog after all). John gave an overview of his work with data from GPS sensors. He discussed his work on predicting where people will go, as well as his experiences with location privacy and with creating road maps. Given that John is, according to his own website, the “all seeing, all knowing, master of time, space, and dimension,” this was indeed a very informative talk 😉 OK, in all seriousness, the talk was excellent. I find John’s work on predicting people’s destinations and selected routes the most interesting. One really interesting effect of having accurate predictions, and of people sharing such data in the cloud, would be on routing algorithms hosted in the cloud. If such an algorithm knew where all of us are going at any given time, it could propose routes that together make more efficient use of roads, reduce pollution, etc.

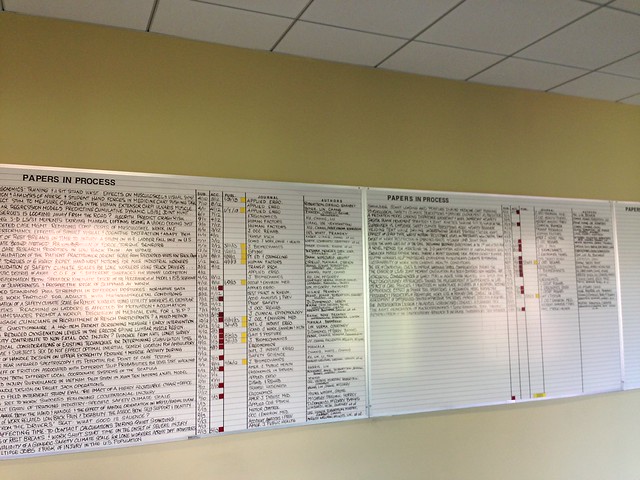

My talk focused on collaborative work with Alex Shyrokov and Peter Heeman on multi-threaded dialogues. Specifically, I talked about designing spoken tasks for human-human dialogue experiments for Alex’s PhD work [3]. Alex wanted to observe how pairs of subjects switch between two dialogue threads, while one of the subjects is also engaged in operating a simulated vehicle. Our hypothesis is that observed human-human dialogue behaviors can be used as the starting point for designing computer dialogue behaviors for in-car spoken dialogue systems. One of the suggestions we put forth in the paper is that the tasks for human-human experiments should be engaging. These are the types of tasks that will result in interesting dialogue behaviors and can thus teach us something about how humans manage multi-threaded dialogues.

Next year the conference moves back to Europe. The host will be Manfred Tscheligi in Salzburg, Austria. Judging by the number of submissions this year and the quality of the conference, we can look forward to many interesting papers next year, both from industry and from academia. Also, the location will be excellent – just think Mozart, Sound of Music (see what Rick Steves has to say), and world-renowned Christmas markets!

References

[1] Zeljko Medenica, Andrew L. Kun, Tim Paek, Oskar Palinko, “Comparing Augmented Reality and Street View Navigation,” AutomotiveUI 2010 Adjunct Proceedings

[2] Oskar Palinko, Sahil Goyal, Andrew L. Kun, “A Pilot Study of the Influence of Illumination and Cognitive Load on Pupil Diameter in a Driving Simulator,” AutomotiveUI 2010 Adjunct Proceedings

[3] Andrew L. Kun, Alexander Shyrokov, Peter A. Heeman, “Spoken Tasks for Human-Human Experiments: Towards In-Car Speech User Interfaces for Multi-Threaded Dialogue,” AutomotiveUI 2010