Do you own a smartphone? If so, you’ve probably tried video calling (e.g. with Skype or FaceTime). Video calling is an exciting technology, but as Zeljko Medenica and I show in our CHI 2012 Work-in-Progress paper [1], it’s not a technology you should use while driving.

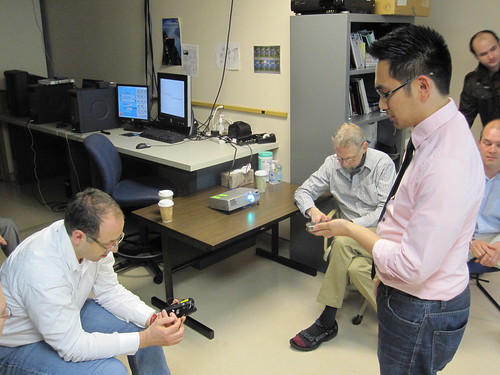

Zeljko and I conducted a driving simulator experiment in which a driver and another participant were given the verbal task of playing the game of Taboo. The driver and the other participant were in separate rooms and spoke to each other over headsets. In one experimental condition, they could also see each other, as shown in the figure above. We wanted to find out whether drivers in this condition would spend a significant amount of time looking at the other participant. This is an important question, as time spent looking at the other participant is time not spent looking at the road ahead!

We found that when drivers felt that the driving task was demanding, they focused on the road ahead. However, when they perceived the driving task to be less demanding, they looked at the other participant significantly more.

What this tells us is that, under certain circumstances, drivers are willing to engage in video calls. This is due, at least in part, to the (Western) social norm of looking at the person you’re talking to. These results should serve as a warning to interface designers, lawmakers (yes, there’s concern [2]), transportation officials, and drivers that video calling can be a serious distraction from driving.

Here’s a video that introduces the experiment in more detail:

References

[1] Andrew L. Kun, Zeljko Medenica, “Video Call, or Not, that is the Question,” to appear in CHI ’12 Extended Abstracts.

[2] Claude Brodesser-Akner, “State Assemblyman: Ban iPhone4 Video-Calling From the Road,” New York Magazine. Date accessed 03/02/2012.

